Travelling Salesman Problem: Python, C++ Algorithm

What is the Travelling Salesman Problem (TSP)?

Travelling Salesman Problem (TSP) is a classic combinatorics problem of theoretical computer science. The problem asks to find the shortest path in a graph with the condition of visiting all the nodes only one time and returning to the origin city.

The problem statement gives a list of cities along with the distances between each city.

Objective: To start from the origin city, visit other cities only once, and return to the original city again. Our target is to find the shortest possible path to complete the round-trip route.

Example of TSP

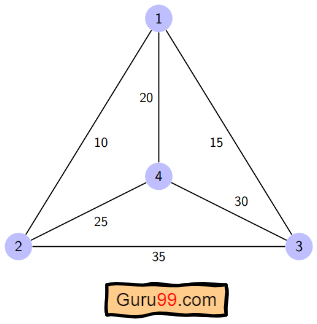

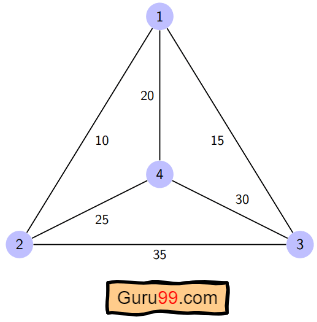

Here a graph is given where 1, 2, 3, and 4 represent the cities, and the weight associated with every edge represents the distance between those cities.

The goal is to find the shortest possible path for the tour that starts from the origin city, traverses the graph while only visiting the other cities or nodes once, and returns to the origin city.

For the above graph, the optimal route is to follow the minimum cost path: 1-2-4-3-1. And this shortest route would cost 10+25+30+15 =80

Different Solutions to Travelling Salesman Problem

Travelling Salesman Problem (TSP) is classified as a NP-hard problem due to having no polynomial time algorithm. The complexity increases exponentially by increasing the number of cities.

There are multiple ways to solve the traveling salesman problem (tsp). Some popular solutions are:

The brute force approach is the naive method for solving traveling salesman problems. In this approach, we first calculate all possible paths and then compare them. The number of paths in a graph consisting of n cities is n! It is computationally very expensive to solve the traveling salesman problem in this brute force approach.

The branch-and-bound method: The problem is broken down into sub-problems in this approach. The solution of those individual sub-problems would provide an optimal solution.

This tutorial will demonstrate a dynamic programming approach, the recursive version of this branch-and-bound method, to solve the traveling salesman problem.

Dynamic programming is such a method for seeking optimal solutions by analyzing all possible routes. It is one of the exact solution methods that solve traveling salesman problems through relatively higher cost than the greedy methods that provide a near-optimal solution.

The computational complexity of this approach is O(N^2 * 2^N) which is discussed later in this article.

The nearest neighbor method is a heuristic-based greedy approach where we choose the nearest neighbor node. This approach is computationally less expensive than the dynamic approach. But it does not provide the guarantee of an optimal solution. This method is used for near-optimal solutions.

Algorithm for Traveling Salesman Problem

We will use the dynamic programming approach to solve the Travelling Salesman Problem (TSP).

Before starting the algorithm, let’s get acquainted with some terminologies:

- A graph G=(V, E), which is a set of vertices and edges.

- V is the set of vertices.

- E is the set of edges.

- Vertices are connected through edges.

- Dist(i,j) denotes the non-negative distance between two vertices, i and j.

Let’s assume S is the subset of cities and belongs to {1, 2, 3, …, n} where 1, 2, 3…n are the cities and i, j are two cities in that subset. Now cost(i, S, j) is defined in such a way as the length of the shortest path visiting node in S, which is exactly once having the starting and ending point as i and j respectively.

For example, cost (1, {2, 3, 4}, 1) denotes the length of the shortest path where:

- Starting city is 1

- Cities 2, 3, and 4 are visited only once

- The ending point is 1

The dynamic programming algorithm would be:

- Set cost(i, , i) = 0, which means we start and end at i, and the cost is 0.

- When |S| > 1, we define cost(i, S, 1) = ∝ where i !=1 . Because initially, we do not know the exact cost to reach city i to city 1 through other cities.

- Now, we need to start at 1 and complete the tour. We need to select the next city in such a way-

cost(i, S, j)=min cost (i, S−{i}, j)+dist(i,j) where i∈S and i≠j

For the given figure, the adjacency matrix would be the following:

| dist(i,j) | 1 | 2 | 3 | 4 |

| 1 | 0 | 10 | 15 | 20 |

| 2 | 10 | 0 | 35 | 25 |

| 3 | 15 | 35 | 0 | 30 |

| 4 | 20 | 25 | 30 | 0 |

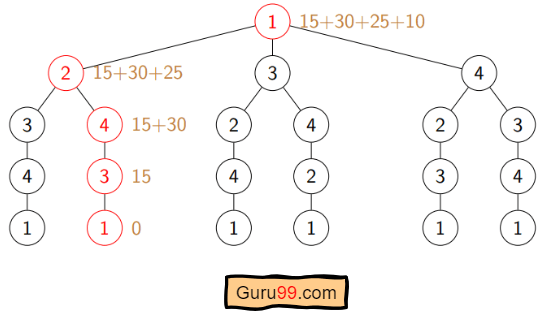

Let’s see how our algorithm works:

Step 1) We are considering our journey starting at city 1, visit other cities once and return to city 1.

Step 2) S is the subset of cities. According to our algorithm, for all |S| > 1, we will set the distance cost(i, S, 1) = ∝. Here cost(i, S, j) means we are starting at city i, visiting the cities of S once, and now we are at city j. We set this path cost as infinity because we do not know the distance yet. So the values will be the following:

Cost (2, {3, 4}, 1) = ∝ ; the notation denotes we are starting at city 2, going through cities 3, 4, and reaching 1. And the path cost is infinity. Similarly-

cost(3, {2, 4}, 1) = ∝

cost(4, {2, 3}, 1) = ∝

Step 3) Now, for all subsets of S, we need to find the following:

cost(i, S, j)=min cost (i, S−{i}, j)+dist(i,j), where j∈S and i≠j

That means the minimum cost path for starting at i, going through the subset of cities once, and returning to city j. Considering that the journey starts at city 1, the optimal path cost would be= cost(1, {other cities}, 1).

Let’s find out how we could achieve that:

Now S = {1, 2, 3, 4}. There are four elements. Hence the number of subsets will be 2^4 or 16. Those subsets are-

1) |S| = Null:

{Φ}

2) |S| = 1:

{{1}, {2}, {3}, {4}}

3) |S| = 2:

{{1, 2}, {1, 3}, {1, 4}, {2, 3}, {2, 4}, {3, 4}}

4) |S| = 3:

{{1, 2, 3}, {1, 2, 4}, {2, 3, 4}, {1, 3, 4}}

5) |S| = 4:

{{1, 2, 3, 4}}

As we are starting at 1, we could discard the subsets containing city 1.

The algorithm calculation:

1) |S| = Φ:

cost (2, Φ, 1) = dist(2, 1) = 10

cost (3, Φ, 1) = dist(3, 1) = 15

cost (4, Φ, 1) = dist(4, 1) = 20

2) |S| = 1:

cost (2, {3}, 1) = dist(2, 3) + cost (3, Φ, 1) = 35+15 = 50

cost (2, {4}, 1) = dist(2, 4) + cost (4, Φ, 1) = 25+20 = 45

cost (3, {2}, 1) = dist(3, 2) + cost (2, Φ, 1) = 35+10 = 45

cost (3, {4}, 1) = dist(3, 4) + cost (4, Φ, 1) = 30+20 = 50

cost (4, {2}, 1) = dist(4, 2) + cost (2, Φ, 1) = 25+10 = 35

cost (4, {3}, 1) = dist(4, 3) + cost (3, Φ, 1) = 30+15 = 45

3) |S| = 2:

cost (2, {3, 4}, 1) = min [ dist[2,3]+Cost(3,{4},1) = 35+50 = 85,

dist[2,4]+Cost(4,{3},1) = 25+45 = 70 ] = 70

cost (3, {2, 4}, 1) = min [ dist[3,2]+Cost(2,{4},1) = 35+45 = 80,

dist[3,4]+Cost(4,{2},1) = 30+35 = 65 ] = 65

cost (4, {2, 3}, 1) = min [ dist[4,2]+Cost(2,{3},1) = 25+50 = 75

dist[4,3]+Cost(3,{2},1) = 30+45 = 75 ] = 75

4) |S| = 3:

cost (1, {2, 3, 4}, 1) = min [ dist[1,2]+Cost(2,{3,4},1) = 10+70 = 80

dist[1,3]+Cost(3,{2,4},1) = 15+65 = 80

dist[1,4]+Cost(4,{2,3},1) = 20+75 = 95 ] = 80

So the optimal solution would be 1-2-4-3-1

Pseudo-code

Algorithm: Traveling-Salesman-Problem

Cost (1, {}, 1) = 0

for s = 2 to n do

for all subsets S belongs to {1, 2, 3, … , n} of size s

Cost (s, S, 1) = Infinity

for all i Є S and i ≠ 1

Cost (i, S, j) = min {Cost (i, S – {i}, j) + dist(i, j) for j Є S and i ≠ j}

Return min(i) Cost (i, {1, 2, 3, …, n}, j) + d(j, i)

Implementation in C/C++

Here’s the implementation in C++:

#include <bits/stdc++.h>

using namespace std;

#define V 4

#define MAX 1000000

int tsp(int graph[][V], int s)

{

vector<int> vertex;

for (int i = 0; i < V; i++)

if (i != s)

vertex.push_back(i);

int min_cost = MAX;

while(next_permutation(vertex.begin(), vertex.end()))

{

int current_cost = 0;

int j = s;

for (int i = 0; i < vertex.size(); i++) {

current_cost += graph[j][vertex[i]];

j = vertex[i];

}

current_cost += graph[j][s];

min_cost = min(min_cost, current_cost);

return min_cost;

}

}

int main()

{

int graph[][V] = { { 0, 10, 15, 20 },{ 10, 0, 35, 25 },{ 15, 35, 0, 30 },{ 20, 25, 30, 0 } };

int s = 0;

cout << tsp(graph, s) << endl;

return 0;

}

Output:

80

Implementation in Python

from sys import maxsize

from itertools, import permutations

V = 4

def tsp(graph, s):

vertex = []

for i in range(V):

if i != s:

vertex.append(i)

min_cost = maxsize

next_permutation=permutations(vertex)

for i in next_permutation:

current_cost = 0

k = s

for j in i:

current_cost += graph[k][j]

k = j

current_cost += graph[k][s]

min_cost = min(min_cost, current_cost)

return min_cost

graph = [[0, 10, 15, 20], [10, 0, 35, 25], [15, 35, 0, 30], [20, 25, 30, 0]]

s = 0

print(tsp(graph, s))

Output:

80

Academic Solutions to TSP

Computer scientists have spent years searching for an improved polynomial time algorithm for the Travelling Salesman Problem. Until now, the problem is still NP-hard.

Though some of the following solutions were published in recent years that have reduced the complexity to a certain degree:

- The classical symmetric TSP is solved by the Zero Suffix Method.

- The Biogeography‐based Optimization Algorithm is based on the migration strategy to solve the optimization problems that can be planned as TSP.

- Multi-Objective Evolutionary Algorithm is designed for solving multiple TSP based on NSGA-II.

- The Multi-Agent System solves the TSP of N cities with fixed resources.

Application of Traveling Salesman Problem

Travelling Salesman Problem (TSP) is applied in the real world in both its purest and modified forms. Some of those are:

- Planning, logistics, and manufacturing microchips: Chip insertion problems naturally arise in the microchip industry. Those problems can be planned as traveling salesman problems.

- DNA sequencing: Slight modification of the traveling salesman problem can be used in DNA sequencing. Here, the cities represent the DNA fragments, and the distance represents the similarity measure between two DNA fragments.

- Astronomy: The Travelling Salesman Problem is applied by astronomers to minimize the time spent observing various sources.

- Optimal control problem: Travelling Salesman Problem formulation can be applied in optimal control problems. There might be several other constraints added.

Complexity Analysis of TSP

- Time Complexity: There are a total of 2N subproblems for each node. So the total number of subproblems would be N*2N. Each of the sub-problems requires linear time to solve. If the origin node is not specified, we have to run loops for N nodes.

So the total time complexity for an optimal solution would be the Number of nodes * Number of subproblems * time to solve each sub-problem. The time complexity can be defined as O(N2 * 2^N).

- Space Complexity: The dynamic programming approach uses memory to store C(S, i), where S is a subset of the vertices set. There is a total of 2N subsets for each node. So, the space complexity is O(2^N).

Next, you’ll learn about Sieve of Eratosthenes Algorithm