SAP DS (Data Services) in HANA

What is SAP Data services?

SAP Data services is an ETL tool which gives a single enterprises level solution for data integration, Transformation, Data quality, Data profiling and text data processing from the heterogeneous source into a target database or data warehouse.

We can create applications (job) in which data mapping and transformation can be done by using the Designer. (Latest version of SAP BODS is 4.2).

Features of Data Services

- It provides high-performance parallel transformations.

- It has comprehensive administrative tools and reporting tool.

- It supports multi-users.

- SAP BODS is very flexible with web-service based application.

- It allows scripting language with rich sets of functions.

- Data Services can integrate with SAP LT Replication Server (SLT) , with the Trigger-based technology. SLT adds delta-capabilities to every SAP or non-SAP source table, Which allows for using data capturing for change and transferring the delta data of the Source table.

- Data validation with dashboards and process auditing.

- Administration tool with scheduling capabilities and monitoring/dashboards.

- Debugging and built-in profiling and viewing data.

- SAP BODS support Broad source and target.

- Any Applications (e.g. SAP).

- Any Databases with bulk loading and changes data capture

- Files: fixed width, comma delimited, COBOL, XML, Excel.

Component of Data Services

SAP DATA services have below component –

- Designer – It is a development tool by which we can create, test, and execute a job that populates a data warehouse.It allows the developer to create objects and configure them by selecting an icon in a source-to-target flow diagram.It can be used to create an application by specifying workflows and data flows.To Open Data Service Designer go to Start Menu -> All Programs -> SAP Data Services (4.2 here) -> Data Service Designer.

- Job Server- It is an application that launches the data services processing engine and serves as an interface to the engine and Data Services Suite.

- Engine- Data Service engine executes individual jobs which are defined in the application.

- Repository- Repository is a database that stores designer predefine objects and user defined objects (source and target metadata, transformation rules).Repository are of two types –

- Local Repository (Used by Designer and Job Server).

- Central Repository ( Used for object sharing and version control)

- Access Server- Access server passes messages between web applications, data services job server and engines.

- Administrator- Web Administrator provides browser-based administration of data services resources detail is as below –

- Configuring, starting and stopping real-time services.

- Scheduling, monitoring and executing batch jobs.

- Configuring Job Server, Access Server, and Repository usage.

- Managing users.

- Publishing batch jobs and real-time services via Web services

- Configuring and managing adapters.

Data Services Architecture –

Data Services architecture have the following component-

- Central Repository – it is used for repositories configurations to jobs servers, security management, version control and object sharing

- Designer – Used for Create Project, Job, workflow, data flow and run.

- Local repository (here you could create change and start jobs, Workflow, dataflow).

- Job server & engine – It manage the jobs.

- Access Server – It is used to execute the real-time jobs created by developers in the repositories.

In below image, Data Services and there component relationship is shown.

SAP BODS Architecture

Designer Window Detail: First we look into the first component of SAP data service- Designer

Detail of each section of data service designer is as below-

- Tool Bar (Used for Open, Save, Back, Validate, Execute, etc.).

- Project Area (Contains the current project, which includes Job, Workflow, and Dataflow. In Data Services, all entities are objects.

- Work Space (The Application Window area in which we define, display, and modify objects).

- Local Object Library (It contains local repository objects, such as transforms, job, workflow, dataflow, etc.).

- Tool Palette (Buttons on tool palette enable you to add new objects to the workspace).

Object Hierarchy

The below diagram shows hierarchical relationships for the key object types within Data Services.

Note:

Workflows and Conditional* are optional

Objects used in SAP Data services detail is as below –

| Objects | Description |

|---|---|

| Project | A project is a highest-level object in the Designer window. Projects provideyou with a way to organize the other objects you create in Data Services.Only one project is open at a time (where “open” means “visible in the projectarea”). |

| Job | A “job” is the smallest unit of work that you can schedule independently forexecution. |

| Scripts | A subset of lines in a procedure. |

| Workflow | A “work flow” is the incorporation of several data flows into a coherentflow of work for an entire job. Workflow is optional. Workflow is a procedure.

|

| Dataflow | A “data flow” is the process by which source data is transformed intotarget data. A data flow is a reusable object. It is always called from a work flow or a job.

|

| Datastore | Logical channel that connects Data Services to source and Datastore target databases.

|

| Target | Table or file in which Data Services loads data from the source. |

Data Services example by load data from SAP Source Table

Everything in Data services is an object. We need to separate data store for each source and target database.

Steps for loading data from SAP source table – SAP BODS have many steps, in which we need to create a data store for source and target and map to them.

- Create Data Store between Source and BODS

- Import the metadata (Structures) to BODS.

- Configure Import Server

- Import the metadata to HANA system.

- Create Data Store between BODS to HANA.

- Create Project.

- Create Job (Batch/Real time)

- Create Work Flow

- Create Data Flow

- Add Object in Dataflow

- Execute the job

- Check the Data Preview in HANA

Step 1) Create Data Store between SAP Source and BODS

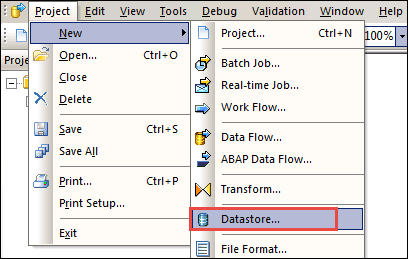

- To Load data from SAP Source to SAP HANA through SAP BODS, we need a data Store. So we create a Data store first as shown below –Project -> New – > Data Store

- A pop-up for Create new data store will appear, enter detail as below –

- Enter data store name “ds_ecc”.

- Select Data store type name as “SAP Applications”.

- Enter database server name

- User name and password.

- Click on “Apply” button.

- Click on “OK” button.

- Data Store will be created and view the created datastore as below-

- Go to Local Object Library

- Select DataStore tab.

- Data store “ds_ecc” will be displayed.

Step 2) Import Metadata (Structure) to BODS Server.

We have created a data store for ECC to BODS; now we import metadata from ECC into BODS. To import follow below steps –

- Select Datastore “ds_ecc” and right click.

- Select Import by Name option.

A pop-up for Import by Name will be displayed. Enter detail as below –

- Select Type as a table.

- Enter Name of Table, which we want to import. Here we are importing KNA1 table.

- Click On “Import” Button. KNA1 table will appear under table node of “ds_ecc” data source.

Table Metadata will be imported, in datastore ds_ecc as below –

Step 3) Configure Import Server

Till now we have imported table to data stored “ds_ecc” created for ECC to SAP BODS Connection. To import data into SAP HANA, we need to configure Import server,

- To do this go to Quick View-> Configure Import Server as below –

- A pop-up for Select System will appear, Select SAP HANA (HDB here) System as below-

- Click on “Next” button. Another Pop-Up for data service credential will appear, enter following details

- SAP BODS server Address (here BODS:6400 )

- Enter SAP BODS Repository Name ( HANAUSER Repositery Name )

- Enter ODBC Data Source (ZTDS_DS).

- Enter Default port for SAP BODS server(8080).

Click on “Finish” button.

Step 4) Import the metadata to HANA System

1. Till now we have Configured Import Server, now we will import metadata from SAP BODS server.

- Click Import option in Quick View.

- A pop-up for Import option will be displayed. Select “Selective Import of Metadata” option.

Click on “Next “Button.

2. A pop-up for “Selective Import of Metadata” will be displayed, in which we select target System.

- Select SAP HANA System (HDB here).

Click on “Next” Button.

Step 5) Create Data Store between BODS and HANA

As we know, in BODS we need to create a separate datastore for source and target. We have already created a data store for the source, now we need to create a data store for the target (between BODS and HANA). So, we create a new data store with name”DS_BODS_HANA”.

- Go to Project -> New -> Datastore.

- A screen for Create new Datastore will appear as below.

- Enter Datastore name (DS_BODS_HANA).

- Enter Datastore type as Database.

- Enter Database type as SAP HANA.

- Select Database Version.

- Enter SAP HANA Database server name.

- Enter Port name for SAP HANA Database.

- Enter Username and password.

- Tick on “Enable automatic data transfer”.

Click on “Apply” and then “OK” button.

Data store “DS_BODS_HANA” will be displayed under datastore tab of Local Object Library as

Below-

- Now we import table in data store “DS_BODS_HANA”.

- Select data store “DS_BODS_HANA” and right click.

- Select Import By Name.

- A pop-up for Import by Name will appear as be below-

- Select Type as Table.

- Enter Name as KNA1.

- Owner will be displayed as Hanauser.

- Click on Import Button.

Table will be imported in “DS_BOD_HANA” datastore, to view data in table follow below steps –

- Click on table “KNA1” in data store “DS_BODS_HANA”.

- Data will be displayed IN TABULAR Format.

Step 6) Define Project: Project group and organize related objects. The Project can contain any number of jobs, Workflow, and dataflow.

- Go to Designer Project menu.

- Select new option.

- Select Project option.

A POP-UP for New Project Creation appears as below. Enter Project Name and Click on Create Button. It will create a project folder in our case BODS_DHK.

Step 7) Define Job: A Job is a reusable object. It contains workflows and dataflow. Jobs can be executed manually or as a schedule. To Execute BODS Process we need to define the job.

We create a Job as JOB_Customer.

- Select Project (BODS_DHK) created in step 1, Right click and select “New Batch Job”.

- Rename it to “JOB_Customer”.

Step 8) Define Workflow:

- Select Job “JOB_Customer” in project area,

- Click the workflow button on the tool palette. Click on the Black Workspace area. A workflow icon will appear in the workspace.

- Change the name of the workflow as “WF_Customer”.

Click the name of workflow, an empty view for the workflow appears in the workspace.

Step 9) Define Dataflow:

- Click On Workflow “WF_Customer”.

- Click the Dataflow button on the tool palette. Click on the Black Workspace area. A Dataflow icon will appear in the workspace.

- Change the name of the Dataflow as “DF_Customer”.

- The Dataflow also appears in the project area on the left under job name.

Step 10) Add Object in Dataflow:

Inside data flow, we can provide instruction to transform source data into the desired form for the target table.

We will see below object –

- An object for the source.

- An object for the target table.

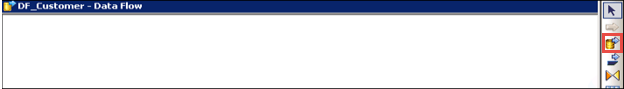

- An object for Query transform. (Query transform maps the columns from source to target.)Click on the dataflow DF_Customer . A blank workspace will appear as below –

- Specify object from Source – Go to Data store “ds_ecc ” and Select table KNA1 and drag and drop to data flow blank screen as below screen-

- Specify object for Target- Select Data store “DS_BODS_HANA” from the repository and select table KNA1.

- Drag and drop to the workspace and select “Make Target “option. There will be two table for source and target. Here we will define the table as source and target.

- Query Transformation – This is a tool used to retrieve data based on input schema for user specific condition and for transport data from source to target.

- Select Query Transform icon from tool Palette, and drag and drop it between source and target object in workspace as below –

- Link Query object to Source.

- Link Query Object to Target table.

- Double Click On Query Icon. By this, we map a column from input schema to output schema.

When we click on Query icon, a next window for mapping will appear, in which we do the following steps –

- Source Table KNA1 is selected.

- Select all column from the source table and right click and select a map to output.

- Target Output selected as Query, and column will be mapped.

5. Save and Validate project.

1. Click on validate Icon.

2. A pop-up for validation success appear.

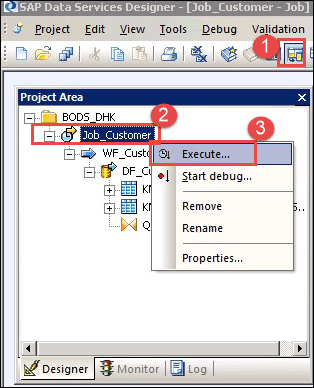

Step 11) Execute Job – To execute Job, follow the below path-

- Select Project Area icon to open Project, and select created Project.

- Select Job and right click.

- Select Execute option, to execute Job.

- After Executing Job, a Job Log window is displayed, in which all message regarding Job will be displayed.

- The last message will be Job < > is completed successfully.

Step 12) – Validate / Check Data in SAP HANA Database.

- Login to SAP HANA database through SAP HANA Studio, and select HANAUSER schema.

- Select KNA1 table in Table node.

- Right Click on table KNA1 and Select Open Data Preview.

- Table (KNA1) Data loaded by BODS processes as above will be displayed in data preview screen.