What is Integration Testing? (Example)

What is Integration Testing?

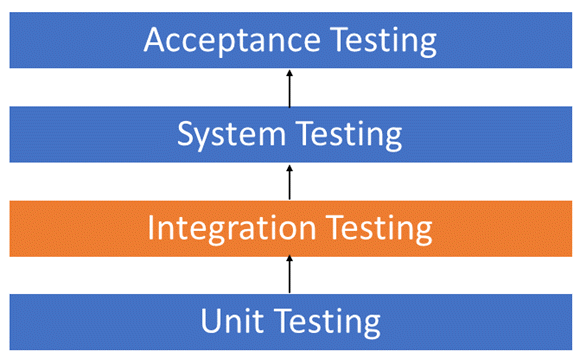

Integration Testing is a type of testing in which software modules are integrated logically and tested as a group. A typical software project consists of multiple software modules, coded by different programmers. The purpose of this level of testing is to expose defects in the interaction between these software modules when they are integrated.

Integration Testing focuses on checking data communication among these modules. It is therefore also called ‘I & T’ (Integration and Testing), ‘String Testing’, and sometimes ‘Thread Testing’.


When and Why to do Integration Testing?

Integration testing is applied after unit testing and before full system testing. It is most useful when verifying data flow, shared APIs, and interdependent modules across different environments. By running integration tests early, teams can uncover interface mismatches, missing data contracts, and dependency failures that unit tests often miss.

You should use integration testing when multiple modules or services must exchange data, when third-party integrations are involved, and whenever changes in one module could affect others. It reduces defect leakage, improves overall quality, and provides confidence that the system can function reliably before progressing to larger-scale testing or release.

Although each software module is unit tested, defects can still exist for various reasons, such as:

- A module is generally designed by an individual software developer whose understanding and programming logic may differ from those of other programmers. Integration Testing is necessary to verify that the software modules work together.

- Clients often change requirements while modules are being developed. These new requirements may not be unit tested, so system integration testing becomes necessary.

- Interfaces between the software modules and the database could be erroneous.

- External hardware interfaces, if any, could be erroneous.

- Inadequate exception handling could cause issues.


Example of Integration Test Case

Integration Test Case differs from other test cases in the sense that it focuses mainly on the interfaces & flow of data/information between the modules. Here, priority is to be given to the integrating links rather than the unit functions, which are already tested.

The sample integration test cases below cover the following scenario: an application has three modules, ‘Login Page’, ‘Mailbox’, and ‘Delete emails’, and each of them is integrated logically.

Here, do not concentrate much on testing the Login Page, as that has already been done in Unit Testing. Instead, check how it is linked to the Mailbox page.

Similarly, for the Mailbox, check its integration with the Delete emails module.

| Test Case ID | Test Case Objective | Test Case Description | Expected Result |
|---|---|---|---|
| 1 | Check the interface link between the Login and Mailbox module | Enter login credentials and click on the Login button | To be directed to the Mail Box |
| 2 | Check the interface link between the Mailbox and the Delete Mails Module | From Mailbox, select the email and click the delete button | Selected email should appear in the Deleted/Trash folder |
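The two test cases in the table can be sketched as automated checks. This is a minimal illustration, not a real mail application: `LoginModule`, `Mailbox`, `delete_email`, and `build_app` are hypothetical names invented for the example, and the modules are simple in-memory objects. The point is that each test exercises a link between modules rather than the internals of one module.

```python
from dataclasses import dataclass, field


class AuthError(Exception):
    """Raised when login credentials are rejected."""


@dataclass
class Mailbox:
    """Hypothetical mailbox module holding an inbox and a trash folder."""
    inbox: list = field(default_factory=list)
    trash: list = field(default_factory=list)


class LoginModule:
    """Hypothetical login module that hands a mailbox to authenticated users."""

    def __init__(self, users, mailboxes):
        self._users = users          # username -> password
        self._mailboxes = mailboxes  # username -> Mailbox

    def login(self, username, password):
        if self._users.get(username) != password:
            raise AuthError("invalid credentials")
        # The interface under test: a successful login returns the user's mailbox.
        return self._mailboxes[username]


def delete_email(mailbox, subject):
    """Hypothetical delete module: moves a message from the inbox to trash."""
    mailbox.inbox.remove(subject)
    mailbox.trash.append(subject)


def build_app():
    """Wire the three modules together with fixed test data."""
    mailboxes = {"alice": Mailbox(inbox=["Welcome", "Invoice"])}
    return LoginModule({"alice": "s3cret"}, mailboxes)


def test_login_leads_to_mailbox():
    # Test case 1: Login -> Mailbox link.
    mailbox = build_app().login("alice", "s3cret")
    assert mailbox.inbox == ["Welcome", "Invoice"]


def test_deleted_email_appears_in_trash():
    # Test case 2: Mailbox -> Delete link.
    mailbox = build_app().login("alice", "s3cret")
    delete_email(mailbox, "Invoice")
    assert mailbox.inbox == ["Welcome"]
    assert mailbox.trash == ["Invoice"]
```

A test runner such as pytest would collect the two `test_` functions automatically; they can also be called directly.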

Best Integration Testing Tool

1) Testsigma

Testsigma is a cloud-based integration testing platform that I’ve found essential for automating interactions between services, APIs, and user interfaces in a unified environment. It’s specifically designed for teams who need to validate data consistency and behavioral accuracy when different application components work together, eliminating the complexity of managing fragmented testing approaches.

During my integration testing projects, I used Testsigma’s unified workflows to verify end-to-end data flow across backend services and frontend interfaces. The platform’s ability to combine API validations with UI checks in single test scenarios gave me confidence that component interactions remained stable, while centralized reporting helped me quickly identify and resolve integration failures before they impacted production.

Features:

- Unified API and UI Test Flows: This feature allows you to combine API calls, UI interactions, and validations within a single cohesive test scenario. It eliminates context switching between separate tools and ensures complete integration coverage. You can verify that backend responses correctly drive frontend behavior in real-world workflows. I use this to validate end-to-end data consistency across service boundaries efficiently.

- Advanced Parameterization and Data Handling: Testsigma provides flexible data management capabilities to test diverse integration scenarios with different inputs and conditions. You can externalize test data, reuse datasets across flows, and validate multiple integration paths. This feature supports dynamic data injection and environment-specific configurations. I found this particularly effective for covering edge cases and boundary conditions systematically.

- Multi-Layer Assertions and Validations: It enables comprehensive verification across API responses, database states, and UI elements within integrated test flows. You can assert on JSON payloads, HTTP status codes, database values, and visual components simultaneously. This feature ensures complete validation of integration points. I rely on it to catch subtle data transformation issues that single-layer testing might miss.

- Continuous Integration and Deployment Support: The platform integrates seamlessly with CI/CD pipelines to execute integration tests automatically on every build or deployment. You can configure triggers, webhooks, and scheduled runs to maintain continuous validation. It supports popular tools like Jenkins, GitLab, and Azure DevOps. I recommend leveraging this to detect integration regressions early in development cycles.

- Centralized Reporting and Failure Analysis: Testsigma generates detailed reports that highlight integration failures, their root causes, and downstream impacts across services. You can drill down into specific test steps, view request-response pairs, and trace data flow issues. This feature provides historical trends and comparison analytics. I’ve used it to accelerate debugging and coordinate fixes across distributed teams efficiently.


Pricing:

- Price: Custom pricing adjusted to integration test volume, environment needs, and team structure

- Free Trial: 14-Days Free Trial


Types of Integration Testing

Software engineering defines several strategies for executing integration testing:

- Big Bang Approach

- Incremental Approach, which is further divided into the following:

  - Bottom Up Approach

  - Top Down Approach

  - Sandwich Approach – Combination of Top Down and Bottom Up

Below are the different strategies, the way they are executed, and their limitations as well as advantages.

Big Bang Testing

Big Bang Testing is an integration testing approach in which all the components or modules are integrated at once and then tested as a unit. This combined set of components is treated as a single entity during testing. If any component in the unit is not complete, the integration process cannot proceed.

Advantages:

- Faster setup – All modules integrated in one go.

- Full system view – Observe overall behavior immediately.

- No stubs/drivers – Reduces extra development effort.

- Good for small projects – Simpler systems fit well.

- User-oriented – Matches end-user experience closely.

Disadvantages:

- Hard to debug – Failures harder to isolate.

- Late defect detection – Bugs found only after full integration.

- High risk – Major issues may block entire testing.

- Not scalable – Complex systems become unmanageable.

- Poor test coverage – Some modules tested insufficiently.

Incremental Testing

In the Incremental Testing approach, testing is done by integrating two or more modules that are logically related to each other and then testing for the proper functioning of the application. Then the other related modules are integrated incrementally, and the process continues until all the logically related modules are integrated and tested successfully.

The Incremental Approach, in turn, is carried out using three different methods:

- Bottom Up

- Top Down

- Sandwich Approach

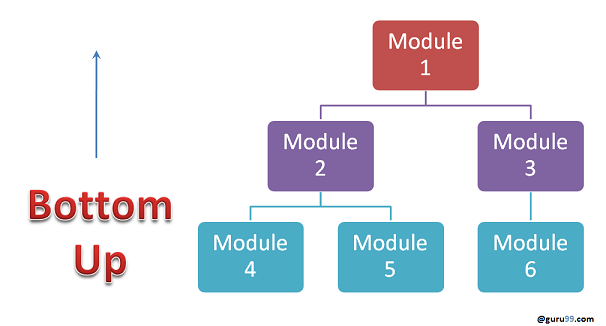

Bottom-up Integration Testing

Bottom-up Integration Testing is a strategy in which the lower-level modules are tested first. These tested modules are then used to facilitate the testing of higher-level modules. Once the lower-level modules are tested and integrated, the next level of modules is formed, and the process continues until the top-level modules are tested.


Advantages:

- Early module testing – Lower-level modules tested first.

- Easier debugging – Defects isolated at module level.

- No stubs needed – Drivers are simpler to create.

- Reliable foundation – Core modules tested before higher levels.

- Progressive integration – System grows steadily with confidence.

Disadvantages:

- Late user view – Full system visible only at end.

- Needs drivers – Extra effort to build drivers.

- UI delayed – Top-level interfaces tested very late.

- Time-consuming – Progressive integration takes longer.

- Test gaps – Issues in high-level interactions may be missed.

Top-down Integration Testing

Top-down integration Testing is a method in which integration testing takes place from top to bottom, following the control flow of the software system. The higher-level modules are tested first, and then the lower-level modules are tested and integrated in order to check the software functionality. Stubs are used for testing if some modules are not ready.

Advantages:

- Early user view – Interfaces tested from the start.

- Critical modules first – High-level logic validated early.

- Progressive integration – Issues caught step by step.

- No drivers needed – Only stubs required.

- Early design validation – Confirms system architecture quickly.

Disadvantages:

- Needs stubs – Writing many stubs adds effort.

- Lower modules delayed – Core modules tested later.

- Incomplete early tests – Missing details from unintegrated modules.

- Debugging harder – Errors may propagate from stubs.

- Time-consuming – Stub creation slows process.

Sandwich Testing

Sandwich Testing is a strategy in which top-level modules and lower-level modules are tested at the same time, and the two are then integrated and tested as a system. It combines the Top-down and Bottom-up approaches and is therefore also called Hybrid Integration Testing. It makes use of both stubs and drivers.

Advantages:

- Balanced approach – Combines top-down and bottom-up strengths.

- Parallel testing – Top and bottom modules tested simultaneously.

- Faster coverage – More modules tested earlier.

- Critical modules prioritized – Both high and low levels validated.

- Reduced risk – Issues detected from both ends.

Disadvantages:

- High complexity – Harder to plan and manage.

- Needs stubs/drivers – Extra effort for test scaffolding.

- Costly – More resources and time required.

- Middle modules delayed – Tested only after top and bottom.

- Not ideal for small systems – Overhead outweighs benefits.

What are Stubs and Drivers in Integration Testing?

Stubs and drivers are essential dummy programs that enable integration testing when not all modules are available simultaneously. These test doubles simulate missing components, allowing testing to proceed without waiting for complete system development.

What are Stubs?

Stubs are dummy modules that replace lower-level components not yet developed or integrated. They’re called by the module under test and return predefined responses. For example, when testing a payment processing module that needs tax calculation, a stub can return fixed tax values until the actual tax module is ready.

Characteristics of Stubs:

- Simulate lower-level module behavior

- Return hard-coded or simple calculated values

- Used in top-down integration testing

- Minimal functionality implementation
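As a rough sketch of the payment and tax example above, the stub below stands in for a tax module that is not ready yet. `TaxServiceStub`, `PaymentProcessor`, `rate_for`, and `total_due` are hypothetical names chosen for illustration, and the 10% rate is an arbitrary fixed value.

```python
class TaxServiceStub:
    """Stub for the unavailable tax module: always returns a fixed 10% rate."""

    def rate_for(self, region):
        # Hard-coded response; there is no real tax logic here.
        return 0.10


class PaymentProcessor:
    """Module under test; it calls whatever tax service it is given."""

    def __init__(self, tax_service):
        self._tax_service = tax_service

    def total_due(self, amount, region):
        rate = self._tax_service.rate_for(region)
        return round(amount * (1 + rate), 2)


def test_total_includes_tax_from_stub():
    processor = PaymentProcessor(TaxServiceStub())
    assert processor.total_due(100.00, "anywhere") == 110.00
```

Because the tax service is passed in through the constructor, the real tax module can later replace the stub without changing `PaymentProcessor` or the test.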

What are Drivers?

Drivers are dummy programs that call the module being tested, simulating higher-level components. They pass test data to lower-level modules and collect results. For instance, when testing a database module, a driver simulates the business logic layer, sending queries.

Characteristics of Drivers:

- Invoke modules under test with test data

- Capture and validate responses

- Used in bottom-up integration testing

- Control test execution flow
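A driver for the database example above might look like the following sketch. It assumes a hypothetical `OrderRepository` as the lower-level module and uses Python's built-in `sqlite3` with an in-memory database; `run_driver` plays the role of the business logic layer that does not exist yet.

```python
import sqlite3


class OrderRepository:
    """Lower-level database module under test (hypothetical)."""

    def __init__(self, connection):
        self._conn = connection
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(id INTEGER PRIMARY KEY, item TEXT, qty INTEGER)"
        )

    def add(self, item, qty):
        cursor = self._conn.execute(
            "INSERT INTO orders (item, qty) VALUES (?, ?)", (item, qty)
        )
        self._conn.commit()
        return cursor.lastrowid

    def total_quantity(self, item):
        row = self._conn.execute(
            "SELECT COALESCE(SUM(qty), 0) FROM orders WHERE item = ?", (item,)
        ).fetchone()
        return row[0]


def run_driver():
    """Driver: feeds test data to the repository and collects the results."""
    connection = sqlite3.connect(":memory:")
    try:
        repo = OrderRepository(connection)
        first_id = repo.add("widget", 2)
        second_id = repo.add("widget", 3)
        repo.add("gadget", 1)
        return {
            "ids_are_distinct": first_id != second_id,
            "widget_total": repo.total_quantity("widget"),
            "missing_total": repo.total_quantity("unknown"),
        }
    finally:
        connection.close()
```

The driver only orchestrates calls and gathers results; the checks on those results live in the test that invokes it.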

Practical Implementation Example

Payment Module Testing:

- Stub: Simulates tax calculation service returning 10% tax

- Driver: Simulates checkout process calling payment module

- Result: Payment module tested independently of unavailable components

When to Use Each?

| Aspect | Stub | Driver |
|---|---|---|
| Testing Approach | Top-down testing | Bottom-up testing |
| Replaces | Lower-level modules | Higher-level modules |
| Function | Returns dummy data | Sends test data |
| Complexity | Simple responses | Test orchestration |

Stubs and drivers reduce testing dependencies, enable parallel development, and accelerate testing cycles by eliminating wait times for complete system availability.

How to do Integration Testing?

Regardless of the software testing strategy chosen (discussed above), the integration test procedure follows these steps:

1. Prepare the integration test plan.

2. Design the test scenarios, cases, and scripts.

3. Execute the test cases and report the defects.

4. Track and re-test the defects.

5. Repeat steps 3 and 4 until integration completes successfully.

Brief Description of Integration Test Plans

It includes the following attributes:

- Methods/Approaches to testing (as discussed above).

- In-scope and out-of-scope items of Integration Testing.

- Roles and Responsibilities.

- Pre-requisites for Integration testing.

- Testing environment.

- Risk and Mitigation Plans.

What are the Entry and Exit Criteria of Integration Testing?

Entry and exit criteria define clear checkpoints for starting and completing integration testing, ensuring systematic progress through the testing lifecycle while maintaining quality standards.

Entry Criteria:

- Components/modules are unit tested.

- All high-priority bugs are fixed and closed.

- All modules are code-complete and integrated successfully.

- The integration test plan, test cases, and scenarios are documented and signed off.

- The required test environment is set up for integration testing.

Exit Criteria:

- The integrated application is tested successfully.

- Executed test cases are documented.

- All high-priority bugs are fixed and closed.

- Technical documents are submitted, followed by release notes.

How would you Design Integration Test Cases?

A strong integration test validates how modules exchange data in real workflows. Below is an example of a user login flow that integrates UI, API, and database layers:

| Step | Action | Expected Outcome |
|---|---|---|
| 1 | User enters valid credentials on the login screen | Credentials sent securely to the authentication API |
| 2 | API validates credentials against the database | Database confirms match for username/password |
| 3 | API returns an authentication token | Token generated and sent back to the application |
| 4 | UI redirects the user to the dashboard | User session established successfully |

This simple flow confirms communication across three critical modules: UI → API → Database. A failed step indicates exactly where integration breaks, helping teams isolate defects faster than system-level testing alone.
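The flow above could be automated along these lines. This is a sketch under several assumptions: `LoginScreen`, `AuthApi`, and `create_user_db` are hypothetical stand-ins for the UI, API, and database layers; the database is an in-memory SQLite instance; and the plain SHA-256 hash is used only to keep the example short, not as a recommendation for storing passwords.

```python
import hashlib
import secrets
import sqlite3


def create_user_db():
    """Database layer: an in-memory SQLite database with one known user."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (username TEXT PRIMARY KEY, password_hash TEXT)")
    conn.execute(
        "INSERT INTO users VALUES (?, ?)",
        ("alice", hashlib.sha256(b"s3cret").hexdigest()),
    )
    conn.commit()
    return conn


class AuthApi:
    """API layer: checks credentials against the database and issues a token."""

    def __init__(self, conn):
        self._conn = conn

    def authenticate(self, username, password):
        row = self._conn.execute(
            "SELECT password_hash FROM users WHERE username = ?", (username,)
        ).fetchone()
        supplied = hashlib.sha256(password.encode()).hexdigest()
        if row is None or not secrets.compare_digest(row[0], supplied):
            return None
        return secrets.token_hex(16)


class LoginScreen:
    """UI layer: submits credentials and decides which page to show next."""

    def __init__(self, api):
        self._api = api
        self.session_token = None

    def submit(self, username, password):
        self.session_token = self._api.authenticate(username, password)
        return "/dashboard" if self.session_token else "/login?error=1"


def test_valid_login_reaches_dashboard():
    screen = LoginScreen(AuthApi(create_user_db()))
    assert screen.submit("alice", "s3cret") == "/dashboard"
    assert screen.session_token is not None


def test_invalid_login_stays_on_login_page():
    screen = LoginScreen(AuthApi(create_user_db()))
    assert screen.submit("alice", "wrong") == "/login?error=1"
    assert screen.session_token is None
```

Each test passes through all three layers, so a failure points to one of the two links (UI to API, or API to database) rather than to a single unit.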

Best Practices/Guidelines for Integration Testing

- First, determine the Integration Test Strategy that could be adopted, and later prepare the test cases and test data accordingly.

- Study the Architecture design of the Application and identify the Critical Modules. These need to be tested on priority.

- Obtain the interface designs from the Architectural team and create test cases to verify all of the interfaces in detail. Interfaces to databases, external hardware, and other software applications must be tested in detail.

- After the test cases, it’s the test data that plays the critical role.

- Always have the mock data prepared prior to executing. Do not select test data while executing the test cases.

Common Challenges and Solutions

Integration testing presents unique obstacles that can impact project timelines and quality. Here are the most critical challenges and their practical solutions.

1. Complex Dependencies Management

Challenge: Multiple module dependencies create intricate testing scenarios with cascading failures.

Solution: Use dependency injection, containerization (Docker), and test in incremental layers. Document all interconnections in dependency matrices.

2. Incomplete Modules

Challenge: Testing is blocked when dependent modules aren’t ready.

Solution: Develop comprehensive stubs/drivers early, use service virtualization (WireMock), and implement contract testing with well-defined interfaces.

3. Test Data Management

Challenge: Maintaining consistent, realistic test data across systems.

Solution: Implement automated test data generation, use database snapshots for quick resets, and version control test data alongside test cases.
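One lightweight way to get snapshot-style resets, shown here as a sketch with Python's built-in `sqlite3`, is to dump a seeded database to an SQL script once and restore it into a fresh in-memory database for every test. The `accounts` table and the helper names are hypothetical; a larger system would more likely rely on its database's own snapshot or backup tooling.

```python
import sqlite3


def build_seed_snapshot():
    """Create the baseline dataset once and return it as an SQL script."""
    seed = sqlite3.connect(":memory:")
    seed.execute(
        "CREATE TABLE accounts (id INTEGER PRIMARY KEY, owner TEXT, balance INTEGER)"
    )
    seed.executemany(
        "INSERT INTO accounts (owner, balance) VALUES (?, ?)",
        [("alice", 100), ("bob", 50)],
    )
    seed.commit()
    snapshot = "\n".join(seed.iterdump())
    seed.close()
    return snapshot


def fresh_database(snapshot):
    """Restore the snapshot into a new in-memory database for one test."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(snapshot)
    return conn


def balances(conn):
    """Return {owner: balance} for every account."""
    rows = conn.execute("SELECT owner, balance FROM accounts ORDER BY id").fetchall()
    return dict(rows)
```

Because the snapshot is plain SQL text, it can be stored in version control next to the test cases that depend on it.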

4. Environment Configuration

Challenge: Inconsistent environments cause integration failures.

Solution: Use Infrastructure as Code (IaC), containerization for environment parity, and configuration management tools like Ansible.

5. Debugging Integration Failures

Challenge: Identifying root causes across multiple components is complex.

Solution: Implement comprehensive logging, use distributed tracing (Jaeger/Zipkin), and add correlation IDs to track requests across services.
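Correlation IDs can be illustrated with the standard `logging` and `contextvars` modules. In this sketch the two "services" are ordinary functions in one process, and all names (`order_service`, `inventory_service`, `CorrelationIdFilter`, `run_demo`) are hypothetical; in a distributed system the ID would usually travel in a request header instead.

```python
import contextvars
import io
import logging
import uuid

# Holds the ID of the request currently being handled.
correlation_id = contextvars.ContextVar("correlation_id", default="-")


class CorrelationIdFilter(logging.Filter):
    """Copies the current correlation ID onto every log record."""

    def filter(self, record):
        record.correlation_id = correlation_id.get()
        return True


def make_logger(name, handler):
    """Return a logger that writes only to the given handler."""
    logger = logging.getLogger(name)
    logger.handlers.clear()
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    return logger


def inventory_service(logger, item):
    logger.info("reserving %s", item)


def order_service(order_log, inventory_log, item, request_id=None):
    """Entry point: assigns a correlation ID, then calls the next service."""
    token = correlation_id.set(request_id or uuid.uuid4().hex)
    try:
        order_log.info("order received for %s", item)
        inventory_service(inventory_log, item)
    finally:
        correlation_id.reset(token)


def run_demo():
    """Run one request and return the captured log lines."""
    stream = io.StringIO()
    handler = logging.StreamHandler(stream)
    handler.addFilter(CorrelationIdFilter())
    handler.setFormatter(
        logging.Formatter("%(correlation_id)s %(name)s %(message)s")
    )
    orders = make_logger("orders", handler)
    inventory = make_logger("inventory", handler)
    order_service(orders, inventory, "widget", request_id="req-42")
    return stream.getvalue().splitlines()
```

Every line logged while handling the request carries the same ID, so a failure in one component can be matched to the calls that led to it.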

6. Third-Party Service Integration

Challenge: External service unavailability or API changes disrupt testing.

Solution: Mock external services (Postman Mock Server), implement retry mechanisms, and maintain API version compatibility testing.
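A retry mechanism can be tested against a mocked external service without any network access. The sketch below uses `unittest.mock.Mock` from the standard library; `ServiceUnavailable`, `call_with_retry`, `fetch_exchange_rate`, and the client's `get_rate` method are hypothetical names, and the example omits the delay or backoff between attempts that a production retry would normally include.

```python
from unittest.mock import Mock


class ServiceUnavailable(Exception):
    """Raised by the (hypothetical) external client when a call fails."""


def call_with_retry(operation, attempts=3):
    """Call operation(), retrying on ServiceUnavailable up to `attempts` times."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for _ in range(attempts):
        try:
            return operation()
        except ServiceUnavailable as error:
            last_error = error
    raise last_error


def fetch_exchange_rate(client, currency):
    """Module under test: wraps the external call in the retry helper."""
    return call_with_retry(lambda: client.get_rate(currency))


def test_recovers_after_transient_failures():
    client = Mock()
    # Two failures followed by a successful response.
    client.get_rate.side_effect = [ServiceUnavailable(), ServiceUnavailable(), 1.25]
    assert fetch_exchange_rate(client, "EUR") == 1.25
    assert client.get_rate.call_count == 3


def test_gives_up_when_service_stays_down():
    client = Mock()
    client.get_rate.side_effect = ServiceUnavailable()
    try:
        fetch_exchange_rate(client, "EUR")
    except ServiceUnavailable:
        pass
    else:
        raise AssertionError("expected ServiceUnavailable")
    assert client.get_rate.call_count == 3
```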

7. Performance Bottlenecks

Challenge: Integration points become bottlenecks under load.

Solution: Conduct early performance profiling, implement caching strategies, and use asynchronous communication where appropriate.


Summary

Integration testing ensures that individual software modules work together seamlessly, validating data flow and interactions across components. Positioned between unit and system testing, it identifies issues that isolated tests often miss, reducing risks before release.

Different approaches—such as Big-Bang, Top-Down, Bottom-Up, and Sandwich—allow teams to adapt testing to project size and complexity. Choosing the right strategy helps balance speed, coverage, and defect isolation.

Modern tools, automation, and CI/CD integration make integration testing scalable and efficient. Despite challenges like environment mismatches or unstable dependencies, disciplined practices and careful planning ensure reliable, high-quality software delivery.