PyTorch Transfer Learning Tutorial with Examples

What is Transfer Learning?

Transfer Learning is a technique that uses a model trained on one task to solve another, related task. It is a Machine Learning method that stores the knowledge gained while solving one problem and reuses that knowledge to solve a different but related problem. Reusing what was learned on the earlier task improves efficiency, because the new model does not have to learn everything from scratch.

Reusing the weights of another network is popular because training a network from scratch needs a lot of data and time. Instead, you take a pretrained network together with its weights and replace its last layer to fit your own problem. The advantage is that only the last layer has to be trained, so a small dataset is enough.
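As a minimal sketch of the idea, assume a tiny hypothetical `backbone` that stands in for a real pretrained network (later in this tutorial that role is played by VGG19). Freezing means setting `requires_grad = False` on the reused weights, so only the new last layer (`head`, also hypothetical) receives gradients:

```python
import torch
import torch.nn.functional as F
from torch import nn

# Hypothetical stand-in for a pretrained network's reused layers.
backbone = nn.Sequential(nn.Linear(16, 8), nn.ReLU())
# New task-specific last layer with 2 output classes.
head = nn.Linear(8, 2)

# Freeze the reused layers: they get no gradients and are not updated.
for param in backbone.parameters():
    param.requires_grad = False

model = nn.Sequential(backbone, head)

trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)
frozen = sum(p.numel() for p in model.parameters() if not p.requires_grad)
print(trainable, frozen)  # 18 136

# One backward pass on random data: only the head receives gradients.
x = torch.randn(4, 16)
y = torch.tensor([0, 1, 0, 1])
loss = F.cross_entropy(model(x), y)
loss.backward()
print(backbone[0].weight.grad is None)    # True
print(head.weight.grad is not None)       # True
```

Only the 18 parameters of the new layer would be updated here, which is why a small dataset can be enough to train it.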

Next in this PyTorch Transfer learning tutorial, we will learn how to use Transfer Learning with PyTorch.

Loading Dataset

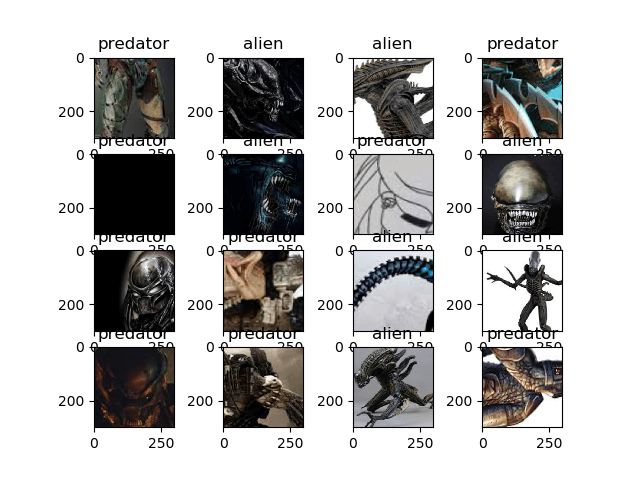

Source: Alien vs. Predator Kaggle

Before you start using Transfer Learning with PyTorch, you need to understand the dataset that you are going to use. In this Transfer Learning PyTorch example, you will classify images as Alien or Predator, using a dataset of nearly 700 images. Because only the last layer is trained, this technique does not need a large amount of training data. You can download the dataset from Kaggle: Alien vs. Predator.
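`torchvision.datasets.ImageFolder`, used in Step 1, expects one subfolder per class inside each split folder and assigns integer labels in sorted folder-name order. The sketch below mimics that class discovery with the standard library only. `list_classes` is a hypothetical helper (not the torchvision API), and the folder names `alien` and `predator` are an assumption about how the downloaded dataset is organized:

```python
import os
import tempfile


def list_classes(split_dir):
    """Mimic ImageFolder's class discovery: one class per subfolder, sorted by name."""
    classes = sorted(entry.name for entry in os.scandir(split_dir) if entry.is_dir())
    class_to_idx = {name: idx for idx, name in enumerate(classes)}
    return classes, class_to_idx


# Build the assumed layout <data_dir>/<split>/<class>/ with empty folders, for illustration only.
with tempfile.TemporaryDirectory() as data_dir:
    for split in ("train", "validation"):
        for cls in ("predator", "alien"):
            os.makedirs(os.path.join(data_dir, split, cls))
    classes, class_to_idx = list_classes(os.path.join(data_dir, "train"))

print(classes)       # ['alien', 'predator']
print(class_to_idx)  # {'alien': 0, 'predator': 1}
```

With this layout, `alien` gets label 0 and `predator` gets label 1, regardless of the order in which the folders were created.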

How to Use Transfer Learning?

Here is a step-by-step process for using Transfer Learning for Deep Learning with PyTorch:

Step 1) Load the Data

The first step is to load the data and transform the images so that they match the network's input requirements.

You will load the data from folders with torchvision.datasets.ImageFolder. The dataset is already split into a train folder and a validation folder, and one ImageFolder is created for each. The transformation crops the center of each image to 224x224 pixels, converts it to a tensor, and normalizes each color channel.

```python
import os
import time

import numpy as np
import matplotlib.pyplot as plt
import torch
import torch.optim as optim
import torchvision
from torchvision import datasets, models, transforms

data_dir = "alien_pred"  # contains train/ and validation/, each with one folder per class
input_shape = 224
mean = [0.5, 0.5, 0.5]
std = [0.5, 0.5, 0.5]

# Data transformation: center crop, convert to a tensor in [0, 1], normalize each channel.
data_transforms = {
    'train': transforms.Compose([
        transforms.CenterCrop(input_shape),
        transforms.ToTensor(),
        transforms.Normalize(mean, std)
    ]),
    'validation': transforms.Compose([
        transforms.CenterCrop(input_shape),
        transforms.ToTensor(),
        transforms.Normalize(mean, std)
    ]),
}

# One ImageFolder per split; every subfolder of a split is one class.
image_datasets = {
    x: datasets.ImageFolder(os.path.join(data_dir, x), transform=data_transforms[x])
    for x in ['train', 'validation']
}

# Batches of 32 images, shuffled, loaded by 4 worker processes.
dataloaders = {
    x: torch.utils.data.DataLoader(
        image_datasets[x], batch_size=32, shuffle=True, num_workers=4
    )
    for x in ['train', 'validation']
}

dataset_sizes = {x: len(image_datasets[x]) for x in ['train', 'validation']}
print(dataset_sizes)

class_names = image_datasets['train'].classes
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")
```

Let's visualize our dataset for PyTorch Transfer Learning. The visualization code gets the next batch of images and labels from the training data loader, undoes the normalization, and displays 16 of the images with matplotlib.

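```python
# Take one batch from the training loader and plot the first 16 images in a 4 x 4 grid.
images, labels = next(iter(dataloaders['train']))
rows, columns = 4, 4
fig = plt.figure()
for i in range(rows * columns):
    fig.add_subplot(rows, columns, i + 1)
    plt.title(class_names[labels[i].item()])
    # CHW tensor -> HWC array, then undo Normalize so the colors display correctly.
    img = images[i].numpy().transpose((1, 2, 0))
    img = np.clip(std * img + mean, 0, 1)
    plt.imshow(img)
    plt.axis('off')
plt.show()
```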
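The `std * img + mean` step above undoes `transforms.Normalize`, which computes `(x - mean) / std` for each channel. A small NumPy sketch on a made-up 2x2 RGB image (hypothetical values, in height x width x channel layout so the per-channel values broadcast over the last axis) shows that with a mean and std of 0.5, pixel values in [0, 1] map to [-1, 1] and the inverse recovers the original:

```python
import numpy as np

mean = np.array([0.5, 0.5, 0.5])
std = np.array([0.5, 0.5, 0.5])

# Hypothetical 2x2 RGB image with values in [0, 1], the range ToTensor produces.
img = np.array([[[0.0, 0.25, 1.0], [0.5, 0.5, 0.5]],
                [[1.0, 0.0, 0.75], [0.2, 0.4, 0.6]]])

normalized = (img - mean) / std                        # per-channel normalization
restored = np.clip(std * normalized + mean, 0.0, 1.0)  # inverse applied before plt.imshow

print(normalized.min(), normalized.max())  # -1.0 1.0
print(np.allclose(restored, img))          # True
```

Clipping to [0, 1] keeps `plt.imshow` from warning about tiny floating-point overshoots after the inverse transform.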

Step 2) Define Model

In this Deep Learning process, you will use VGG19 from the torchvision module.

You will use torchvision.models to load VGG19 with weights pretrained on ImageNet. After that, you freeze the layers so that they are not trainable. You then replace the last layer with a Linear layer that fits our needs, which is 2 classes. You use CrossEntropyLoss as the classification loss function, and for the optimizer you use SGD with a learning rate of 0.001 and a momentum of 0.9, as shown in the PyTorch Transfer Learning example below.

```python
# Load VGG19 with ImageNet-pretrained weights.
# (torchvision releases older than 0.13 use models.vgg19(pretrained=True) instead.)
vgg_based = models.vgg19(weights=models.VGG19_Weights.DEFAULT)

# Freeze all existing layers so their weights are not updated during training.
for param in vgg_based.parameters():
    param.requires_grad = False

# Replace the last classifier layer (4096 -> 1000 ImageNet classes)
# with a new, trainable layer that outputs one score per class.
number_features = vgg_based.classifier[6].in_features
vgg_based.classifier[6] = torch.nn.Linear(number_features, len(class_names))

vgg_based = vgg_based.to(device)
print(vgg_based)

criterion = torch.nn.CrossEntropyLoss()
# Only the new layer requires gradients, so only its parameters are optimized.
optimizer_ft = optim.SGD(
    [p for p in vgg_based.parameters() if p.requires_grad], lr=0.001, momentum=0.9
)
```

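The output model structure (printed by an older torchvision release; newer releases also list an `avgpool` layer between `features` and `classifier` and print `ReLU(inplace=True)`):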

```text
VGG(
  (features): Sequential(
    (0): Conv2d(3, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (1): ReLU(inplace)
    (2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (3): ReLU(inplace)
    (4): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (5): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (6): ReLU(inplace)
    (7): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (8): ReLU(inplace)
    (9): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (10): Conv2d(128, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (11): ReLU(inplace)
    (12): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (13): ReLU(inplace)
    (14): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (15): ReLU(inplace)
    (16): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (17): ReLU(inplace)
    (18): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (19): Conv2d(256, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (20): ReLU(inplace)
    (21): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (22): ReLU(inplace)
    (23): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (24): ReLU(inplace)
    (25): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (26): ReLU(inplace)
    (27): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
    (28): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (29): ReLU(inplace)
    (30): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (31): ReLU(inplace)
    (32): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (33): ReLU(inplace)
    (34): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
    (35): ReLU(inplace)
    (36): MaxPool2d(kernel_size=2, stride=2, padding=0, dilation=1, ceil_mode=False)
  )
  (classifier): Sequential(
    (0): Linear(in_features=25088, out_features=4096, bias=True)
    (1): ReLU(inplace)
    (2): Dropout(p=0.5)
    (3): Linear(in_features=4096, out_features=4096, bias=True)
    (4): ReLU(inplace)
    (5): Dropout(p=0.5)
    (6): Linear(in_features=4096, out_features=2, bias=True)
  )
)
```
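To see how little of the network is actually trained, the parameter counts can be worked out by hand from the printed structure: a 3x3 `Conv2d` has `in * out * 9 + out` parameters and a `Linear` layer has `in * out + out`. The plain-Python arithmetic below (helper names are hypothetical) sums the frozen layers and the new trainable layer:

```python
def conv_params(in_ch, out_ch, k=3):
    """Weights plus biases of a Conv2d layer with a k x k kernel."""
    return in_ch * out_ch * k * k + out_ch


def linear_params(in_f, out_f):
    """Weights plus biases of a Linear layer."""
    return in_f * out_f + out_f


# (in_channels, out_channels) of the 16 convolutions in the printed `features` block.
convs = [(3, 64), (64, 64), (64, 128), (128, 128),
         (128, 256), (256, 256), (256, 256), (256, 256),
         (256, 512), (512, 512), (512, 512), (512, 512),
         (512, 512), (512, 512), (512, 512), (512, 512)]

frozen = sum(conv_params(i, o) for i, o in convs)
frozen += linear_params(25088, 4096) + linear_params(4096, 4096)  # classifier[0] and [3]
trainable = linear_params(4096, 2)  # the new classifier[6]

print(frozen)     # 139570240
print(trainable)  # 8194
print(f"{trainable / (frozen + trainable):.4%}")  # 0.0059%
```

The 25088 input features of the first classifier layer are the final 512 feature maps of size 7x7 (512 * 7 * 7 = 25088) for a 224x224 input.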

Step 3) Train and Test Model

We will use two helper functions adapted from the official PyTorch Transfer Learning tutorial: `train_model` to train the network and `visualize_model` to check its predictions.

```python
def train_model(model, criterion, optimizer, num_epochs=25):
    since = time.time()

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Put the model in training mode (enables dropout in the VGG classifier).
        model.train()
        train_loss = 0.0

        # Iterate over the training data.
        for inputs, labels in dataloaders['train']:
            inputs = inputs.to(device)
            labels = labels.to(device)

            optimizer.zero_grad()
            outputs = model(inputs)            # forward pass
            loss = criterion(outputs, labels)  # mean loss over the batch
            loss.backward()                    # compute gradients with autograd
            optimizer.step()                   # update the trainable weights

            # Weight the batch mean by the batch size so the epoch average is exact.
            train_loss += loss.item() * inputs.size(0)

        print('{} Loss: {:.4f}'.format('train', train_loss / dataset_sizes['train']))

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(
        time_elapsed // 60, time_elapsed % 60))
    return model
```
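`CrossEntropyLoss` returns the mean loss of each batch, and the last batch is usually smaller than 32. Multiplying by `inputs.size(0)` and dividing by the dataset size, as `train_model` does, gives the exact per-sample average, whereas simply averaging the batch means would overweight a short last batch. A plain-Python sketch with made-up per-sample losses (the helper `split_into_batches` is hypothetical):

```python
import math


def split_into_batches(items, batch_size):
    """Consecutive batches, like a DataLoader without shuffling; the last may be smaller."""
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]


# Hypothetical per-sample losses for 70 samples: 64 losses of 1.0, then 6 of 4.0.
losses = [1.0] * 64 + [4.0] * 6
batch_list = split_into_batches(losses, 32)          # batch sizes 32, 32, 6
batch_means = [sum(b) / len(b) for b in batch_list]  # one mean per batch, like the criterion

true_mean = sum(losses) / len(losses)
# train_model's approach: batch mean * batch size, summed, divided by the dataset size.
weighted = sum(m * len(b) for m, b in zip(batch_means, batch_list)) / len(losses)
# Naive approach: average of the batch means.
naive = sum(batch_means) / len(batch_means)

print([len(b) for b in batch_list])              # [32, 32, 6]
print(math.isclose(weighted, true_mean), naive)  # True 2.0
```

Here the true mean is 88 / 70 (about 1.257), which the weighted version reproduces, while the naive average reports 2.0.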

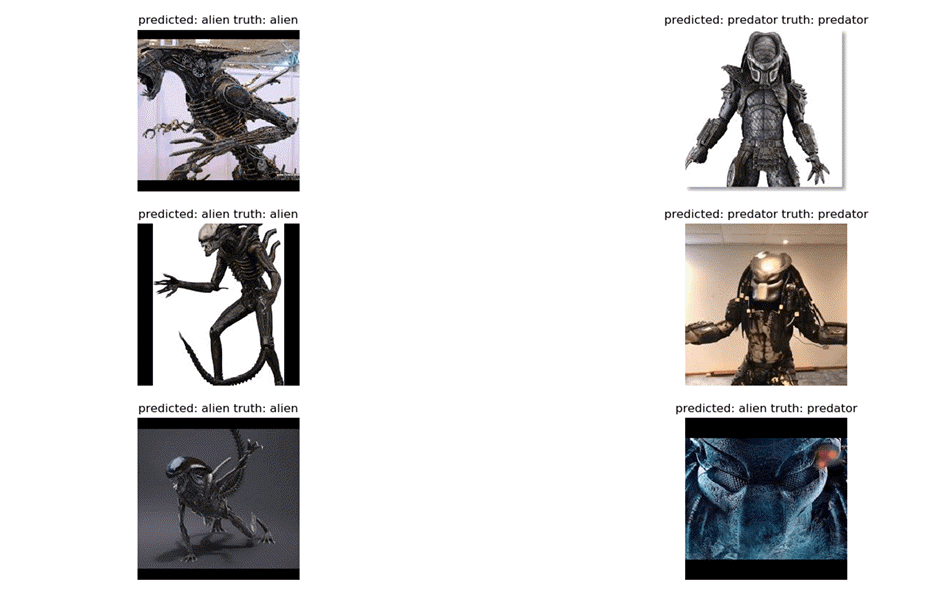

```python
def visualize_model(model, num_images=6):
    was_training = model.training
    model.eval()  # evaluation mode: disables dropout
    images_so_far = 0
    fig = plt.figure()

    with torch.no_grad():
        for inputs, labels in dataloaders['validation']:
            inputs = inputs.to(device)
            labels = labels.to(device)

            outputs = model(inputs)
            _, preds = torch.max(outputs, 1)  # index of the highest score per image

            for j in range(inputs.size(0)):
                images_so_far += 1
                ax = plt.subplot(num_images // 2, 2, images_so_far)
                ax.axis('off')
                ax.set_title('predicted: {} truth: {}'.format(
                    class_names[preds[j].item()], class_names[labels[j].item()]))
                img = inputs.cpu()[j].numpy().transpose((1, 2, 0))
                img = np.clip(std * img + mean, 0, 1)  # undo Normalize for display
                ax.imshow(img)

                if images_so_far == num_images:
                    model.train(mode=was_training)
                    return

    model.train(mode=was_training)
```

Finally in this Transfer Learning in PyTorch example, let's start the training process with the number of epochs set to 25 and evaluate the model after training. At each training step, the model takes the inputs and predicts the outputs. The predictions are passed to the criterion to calculate the loss. Then `loss.backward()` uses autograd to backpropagate the loss and compute the gradients, and `optimizer.step()` uses those gradients to update the weights of the trainable layer.

In `visualize_model`, the trained network predicts the labels for a batch of validation images, and each image is then displayed with matplotlib together with its predicted and true label.

```python
# Train for 25 epochs, then show predictions on validation images.
vgg_based = train_model(vgg_based, criterion, optimizer_ft, num_epochs=25)
visualize_model(vgg_based)
plt.show()
```
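The `optimizer.step()` call inside `train_model` applies SGD with momentum. As a sketch of the update rule described in the `torch.optim.SGD` documentation (with the default `dampening=0` and `nesterov=False`), a velocity accumulates the gradients, `v = momentum * v + g`, and the weight moves by `lr * v`. The toy function, the learning rate of 0.1, and the step count below are illustrative assumptions, not the tutorial's settings:

```python
def sgd_momentum(grad_fn, w, lr, momentum, steps):
    """Sketch of SGD with momentum (dampening=0, nesterov=False) on a single scalar weight."""
    velocity = None
    for _ in range(steps):
        g = grad_fn(w)
        # First step: velocity = gradient; afterwards: velocity = momentum * velocity + gradient.
        velocity = g if velocity is None else momentum * velocity + g
        w = w - lr * velocity
    return w


# Toy problem: minimize f(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
w_final = sgd_momentum(lambda w: 2 * (w - 3), w=0.0, lr=0.1, momentum=0.9, steps=300)
print(w_final)  # very close to 3.0
```

Momentum lets past gradients keep pushing the weight in a consistent direction, which is why it is commonly paired with a small learning rate such as the 0.001 used here.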

Step 4) Results

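The final result reported for this run is an accuracy of 92%. Note that `train_model` prints only the training loss, so the accuracy has to be measured separately on the validation set. The log below appears to come from a version of the code that printed the loss after every batch: within each epoch the value is a running total divided by the dataset size, so the last line of each epoch (0.1500 for epoch 23, 0.1467 for epoch 24) is that epoch's training loss.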

```text
Epoch 23/24
----------
train Loss: 0.0044
train Loss: 0.0078
train Loss: 0.0141
train Loss: 0.0221
train Loss: 0.0306
train Loss: 0.0336
train Loss: 0.0442
train Loss: 0.0482
train Loss: 0.0557
train Loss: 0.0643
train Loss: 0.0763
train Loss: 0.0779
train Loss: 0.0843
train Loss: 0.0910
train Loss: 0.0990
train Loss: 0.1063
train Loss: 0.1133
train Loss: 0.1220
train Loss: 0.1344
train Loss: 0.1382
train Loss: 0.1429
train Loss: 0.1500
Epoch 24/24
----------
train Loss: 0.0076
train Loss: 0.0115
train Loss: 0.0185
train Loss: 0.0277
train Loss: 0.0345
train Loss: 0.0420
train Loss: 0.0450
train Loss: 0.0490
train Loss: 0.0644
train Loss: 0.0755
train Loss: 0.0813
train Loss: 0.0868
train Loss: 0.0916
train Loss: 0.0980
train Loss: 0.1008
train Loss: 0.1101
train Loss: 0.1176
train Loss: 0.1282
train Loss: 0.1323
train Loss: 0.1397
train Loss: 0.1436
train Loss: 0.1467
Training complete in 2m 47s
```
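Since `train_model` reports only the training loss, an accuracy figure needs a separate pass over the validation set. A minimal sketch, with `evaluate_accuracy` as a hypothetical helper name and a tiny constant-output model used only to check the function:

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset


def evaluate_accuracy(model, loader, device="cpu"):
    """Return the fraction of samples in `loader` whose predicted class matches the label."""
    was_training = model.training
    model.eval()  # disable dropout during evaluation
    correct, total = 0, 0
    with torch.no_grad():
        for inputs, labels in loader:
            inputs, labels = inputs.to(device), labels.to(device)
            preds = model(inputs).argmax(dim=1)  # highest-scoring class per sample
            correct += (preds == labels).sum().item()
            total += labels.size(0)
    model.train(mode=was_training)  # restore the previous mode
    return correct / total


# Check with a toy model that always predicts class 0 (zero weights, larger bias for class 0).
toy = nn.Linear(4, 2)
with torch.no_grad():
    toy.weight.zero_()
    toy.bias.copy_(torch.tensor([1.0, 0.0]))

data = TensorDataset(torch.randn(8, 4), torch.tensor([0, 0, 0, 0, 0, 0, 1, 1]))
acc = evaluate_accuracy(toy, DataLoader(data, batch_size=3))
print(acc)  # 0.75
```

In this tutorial it would be called as `evaluate_accuracy(vgg_based, dataloaders['validation'], device)` after training.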

Then the model's predictions are visualized with matplotlib.

Summary

So, let's summarize everything! PyTorch is a growing deep learning framework that suits both beginners and researchers. It offers fast computation, dynamic computation graphs, GPU support, and a Python-first interface. You can define your own network modules with ease and write the training process as a simple loop. In this tutorial, that meant reusing a pretrained VGG19, freezing its layers, and training only a new last layer on a small dataset. PyTorch is a good choice for beginners who want to learn deep learning, and professional researchers benefit from its fast computation and from autograd, which computes gradients through the dynamic graph.